Several Clients to Rule Them All

Our framework supports several HTTP client implementations (classes):

- Socket-based, via THttpClientSocket;

- Windows WinHTTP API, via TWinHttp;

- Windows WinINET API, via TWinINet;

- LibCurl API, via TCurlHttp.

Depending on the system, you may prefer one of these classes over the others.

In practice, they are not equivalent. For instance, our THttpClientSocket is the fastest overall, especially on UNIX when using Unix Sockets instead of TCP to communicate (even if it is no longer true HTTP, it is supported by mORMot classes and also by reverse proxies like nginx).

This blog article details the latest modifications to the mORMot 2 THttpClientSocket class.

OpenSSL TLS Fixes

During an intensive test phase, some fixes were applied to the TLS/HTTPS layer. On Windows, it is now easier to load the OpenSSL dll, and the direct SSPI layer also received some fixes.

Authentication and Proxy Support

THttpClientSocket now has callbacks for HTTP authentication using any kind of custom scheme, with built-in support for the regular Basic algorithm and for a cross-platform Kerberos algorithm, using SSPI on Windows or GSSAPI on UNIX.
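To make the Basic scheme concrete, here is a minimal sketch of what ends up on the wire. It is plain Python rather than mORMot code, and the function name `basic_authorization` is only an illustration: it builds the value of the `Authorization` header as described in RFC 7617, which is what any Basic-capable client has to send.

```python
import base64


def basic_authorization(user: str, password: str) -> str:
    """Return the Authorization header value for the HTTP Basic scheme.

    Hypothetical helper for illustration only, not part of any framework.
    """
    # The credentials are joined with a colon, then Base64-encoded.
    raw = f"{user}:{password}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return f"Basic {token}"
```

With the example credentials from the RFC, `basic_authorization("Aladdin", "open sesame")` returns `Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==`. Since Base64 is an encoding and not encryption, Basic authentication should only be used over TLS.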

This class can now connect via an HTTP proxy tunnel, with proper password authentication if needed.
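As a rough illustration of what such a tunnel involves at the protocol level, the sketch below builds the `CONNECT` request a client typically sends to the proxy before starting its own traffic. It is a generic Python example, not the mORMot implementation; the name `connect_request` and its parameters are assumptions made for this example.

```python
from typing import Optional


def connect_request(host: str, port: int, proxy_auth: Optional[str] = None) -> bytes:
    """Build an HTTP/1.1 CONNECT request asking a proxy to open a tunnel.

    `proxy_auth` is an optional, already formatted Proxy-Authorization
    value (for instance "Basic ..."). Hypothetical helper for illustration.
    """
    target = f"{host}:{port}"
    lines = [f"CONNECT {target} HTTP/1.1", f"Host: {target}"]
    if proxy_auth:
        lines.append(f"Proxy-Authorization: {proxy_auth}")
    # An empty line terminates the request headers.
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")
```

Once the proxy answers with a 2xx status, the same socket carries the client's traffic to the target host, usually starting with the TLS handshake.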

MultiPart Support

One of the most complex uses of HTTP is the ability to split a stream into multiple parts. Until now, support for the MultiPart encoding was limited.

To address this:

- The THttpClientSocket class now accepts not only memory buffers (encoded as RawByteString) but also TStream, so that data of any size can be downloaded (GET) or uploaded (POST);

- We added the THttpMultiPartStream class for multipart/form-data HTTP POST.
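To show why a stream-based design matters for multipart/form-data, here is a minimal Python sketch of the encoding itself. It is not the THttpMultiPartStream implementation: `iter_multipart` and its parameters are hypothetical names, and the file part is always sent as `application/octet-stream` for simplicity. The body is produced chunk by chunk, so a large file never has to be held in memory as a whole.

```python
import io


def iter_multipart(boundary, fields, files, chunk_size=65536):
    """Yield a multipart/form-data body piece by piece.

    fields: dict mapping a form field name to its text value.
    files:  dict mapping a form field name to (filename, binary stream).
    Hypothetical helper for illustration only.
    """
    for name, value in fields.items():
        yield (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
            f"{value}\r\n"
        ).encode("utf-8")
    for name, (filename, stream) in files.items():
        yield (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"; filename="{filename}"\r\n'
            "Content-Type: application/octet-stream\r\n\r\n"
        ).encode("utf-8")
        # Copy the file content in fixed-size chunks instead of reading it at once.
        while True:
            chunk = stream.read(chunk_size)
            if not chunk:
                break
            yield chunk
        yield b"\r\n"
    # The closing delimiter carries two extra dashes.
    yield f"--{boundary}--\r\n".encode("ascii")


def multipart_content_type(boundary):
    """Return the matching Content-Type header value."""
    return f"multipart/form-data; boundary={boundary}"
```

The boundary must be a string that does not occur in any of the parts; real clients usually generate a long random one.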

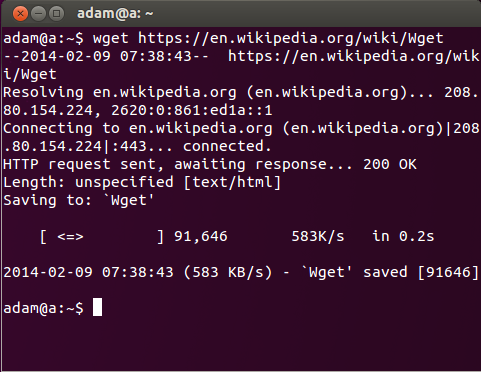

WGet Support

GNU Wget is a free software package for retrieving files using HTTP, HTTPS, FTP and FTPS, the most widely used Internet protocols.

We have implemented its basic feature set, with extensions, in the THttpClientSocket.WGet() method, as a convenient high-level way of downloading files.

- Direct file download with low resource consumption;

- Can resume any previously aborted download without restarting from the beginning, which is very welcome for huge files;

- Optional redirection from the original URI following the 30x status codes from the server;

- Optional on-the-fly hashing of the content, supporting all mormot.crypt.core algorithms;

- Optional progress event, via a callback method or built-in console display;

- Optional folder to maintain a list of locally cached files;

- Optional limitation of the bandwidth consumed during download, to reduce the network consumption e.g. on slow connections;

- Optional timeout check for the whole download process (not only at connection level).
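Two of the features above, resuming and on-the-fly hashing, interact in a way that is easy to get wrong, so here is a small Python sketch of the general idea. It does not reproduce the WGet() code: the helper names are hypothetical, SHA-256 from the Python standard library stands in for whichever algorithm is selected, and the "server response" is simulated by an in-memory stream.

```python
import hashlib
import io


def resume_headers(already_downloaded):
    """Ask the server only for the bytes that are still missing (HTTP Range)."""
    if already_downloaded > 0:
        return {"Range": f"bytes={already_downloaded}-"}
    return {}


def append_and_hash(partial, remainder, chunk_size=65536):
    """Complete a partial download and hash the whole content in one pass.

    partial:   bytes already stored from the aborted attempt.
    remainder: binary stream delivering the missing bytes.
    Returns (full content, hexadecimal SHA-256 digest).
    """
    hasher = hashlib.sha256()
    # The digest must cover the whole file, so the existing part is hashed first.
    hasher.update(partial)
    out = bytearray(partial)
    while True:
        chunk = remainder.read(chunk_size)
        if not chunk:
            break
        out.extend(chunk)
        hasher.update(chunk)  # hash while writing, no second pass needed
    return bytes(out), hasher.hexdigest()
```

A server that honors the Range request replies with status 206 (Partial Content). If it replies with 200 instead, it is sending the whole file again, and the client has to discard the partial data and start over.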

We hope these new features will help you work with HTTP, and perhaps switch from other HTTP client libraries if you want to focus on our framework. We are open to implementing any missing feature you may need.