1. Single Responsibility Principle

When you define a class, it shall be designed to implement only one feature. The so-called feature can be seen as an "axis of change" or "a reason for change".

Therefore:

- One class shall have only one reason that justifies changing its implementation;

- Classes shall have few dependencies on other classes;

- Classes shall be abstracted from the particular layer they are running on - see Multi-tier architecture.

For instance, a TRectangle object should not have both ComputeArea and Draw methods defined at once - they would define two responsibilities, or axes of change: the first responsibility is to provide a mathematical model of a rectangle, and the second is to render it on the GUI.
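As a minimal sketch of this split, assuming integer dimensions and a hypothetical TRectangleTextRenderer class which writes text instead of painting on a real VCL, FMX or LCL canvas, the model and its rendering could live in two classes, each with a single reason to change:

```pascal
program SrpRectangle;
{$IFDEF FPC}{$mode delphi}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}
{$C+} // enable Assert()

uses
  SysUtils;

type
  /// mathematical model only: it changes when the geometry rules change
  TRectangle = class
  private
    fWidth, fHeight: integer;
  public
    constructor Create(aWidth, aHeight: integer);
    function ComputeArea: integer;
    property Width: integer read fWidth;
    property Height: integer read fHeight;
  end;

  /// hypothetical renderer: it changes when the display changes
  // (text output stands in for a real GUI canvas)
  TRectangleTextRenderer = class
  public
    function Draw(aRect: TRectangle): string;
  end;

constructor TRectangle.Create(aWidth, aHeight: integer);
begin
  inherited Create;
  fWidth := aWidth;
  fHeight := aHeight;
end;

function TRectangle.ComputeArea: integer;
begin
  result := fWidth * fHeight;
end;

function TRectangleTextRenderer.Draw(aRect: TRectangle): string;
begin
  // reads the model through its public properties, never modifies it
  result := Format('[rectangle %dx%d]', [aRect.Width, aRect.Height]);
end;

var
  rect: TRectangle;
  renderer: TRectangleTextRenderer;
begin
  rect := TRectangle.Create(3, 4);
  renderer := TRectangleTextRenderer.Create;
  try
    Assert(rect.ComputeArea = 12);
    writeln(renderer.Draw(rect)); // [rectangle 3x4]
  finally
    renderer.Free;
    rect.Free;
  end;
end.
```

Another renderer would be just another class reading the same model, and TRectangle itself would not need to change.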

Splitting classes

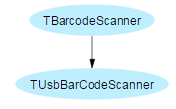

To take an example from real coding, imagine you define a communication component. You want to communicate, say, with a bar-code scanner peripheral. You may define a single class, e.g. TBarcodeScanner, supporting such a device connected over a serial port. Later on, the manufacturer deprecates serial port support, since computers no longer have one, and offers only USB models in its catalog. You may inherit from TBarcodeScanner, and add USB support.

But in practice, this new TUsbBarCodeScanner class is difficult to maintain, since it will inherit from serial-related communication.

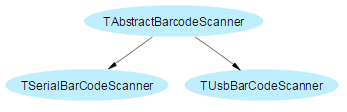

So you start splitting the class hierarchy, using an abstract parent class:

We may define some virtual abstract methods, which would be overridden in inherited classes:

    type
      TAbstractBarcodeScanner = class(TComponent)
      protected
        function ReadChar: byte; virtual; abstract;
        function ReadFrame: TProtocolFrame; virtual; abstract;
        procedure WriteChar(aChar: byte); virtual; abstract;
        procedure WriteFrame(const aFrame: TProtocolFrame); virtual; abstract;
        ...

Then, TSerialBarCodeScanner and TUsbBarCodeScanner classes would override those methods, according to the final implementation.

In fact, this approach is cleaner. But it is not perfect either, since it may be hard to maintain and extend. Imagine the manufacturer is using a standard protocol for communication, whether a USB or a Serial connection is used. You would put this communication protocol (e.g. its state machine, its stream computation, its delay settings) in the TAbstractBarcodeScanner class. But perhaps there would be diverse flavors of it, in TSerialBarCodeScanner or TUsbBarCodeScanner, or even due to diverse models and features (e.g. if it supports 2D or 3D bar-codes).

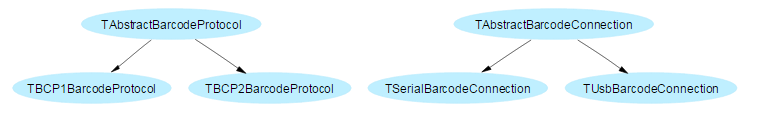

It appears that putting everything in a single class is not a good idea.

Splitting protocol and communication appears to be preferred. Each "axis of change" - i.e. every aspect which may need modifications - requires its own class. Then the T*BarcodeScanner classes would compose protocol and communication classes within a single component.

Imagine we have two identified protocols (named BCP1 and BCP2), and two means of communication (serial and USB). So we would define the following classes:

Then, we may define our final classes and components as such:

    type
      TAbstractBarcodeConnection = class
      protected
        function ReadChar: byte; virtual; abstract;
        procedure WriteChar(aChar: byte); virtual; abstract;
        ...

      TAbstractBarcodeProtocol = class
      protected
        fConnection: TAbstractBarcodeConnection;
        function ReadFrame: TProtocolFrame; virtual; abstract;
        procedure WriteFrame(const aFrame: TProtocolFrame); virtual; abstract;
        ...

      TAbstractBarcodeScanner = class(TComponent)
      protected
        fProtocol: TAbstractBarcodeProtocol;
        fConnection: TAbstractBarcodeConnection;
        ...

And each actual inherited class would initialize the protocol and connection according to the expected model:

    constructor TSerialBarCodeScanner.Create(const aComPort: string; aBitRate: integer);
    begin
      inherited Create(nil); // TComponent constructor, no owner here
      fConnection := TSerialBarcodeConnection.Create(aComPort,aBitRate);
      fProtocol := TBCP1BarcodeProtocol.Create(fConnection);
    end;

Here, we inject the connection instance into the protocol, since the latter may need to read or write some bytes on the wire, when needed.
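This composition can be exercised without any hardware. The following console sketch is not the framework's code: TMemoryBarcodeConnection (reading from an in-memory buffer), the CR-terminated framing given to the "BCP1" protocol, the string-based TProtocolFrame and the plain TObject ancestor (instead of TComponent) are all assumptions made to keep it self-contained, and only the reading side is shown:

```pascal
program BarcodeComposition;
{$IFDEF FPC}{$mode delphi}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}
{$C+} // enable Assert()

type
  TProtocolFrame = string; // simplification: a frame is a plain string
  TAbstractBarcodeConnection = class // axis 1: how bytes travel (serial, USB...)
  public
    function ReadChar: byte; virtual; abstract;
  end;
  TAbstractBarcodeProtocol = class // axis 2: how frames are built over bytes
  protected
    fConnection: TAbstractBarcodeConnection; // injected, not owned
  public
    constructor Create(aConnection: TAbstractBarcodeConnection);
    function ReadFrame: TProtocolFrame; virtual; abstract;
  end;
  TMemoryBarcodeConnection = class(TAbstractBarcodeConnection) // hypothetical
  protected
    fInput: AnsiString;
    fPos: integer;
  public
    constructor Create(const aInput: AnsiString);
    function ReadChar: byte; override;
  end;
  TBCP1BarcodeProtocol = class(TAbstractBarcodeProtocol) // assumed: CR-terminated
  public
    function ReadFrame: TProtocolFrame; override;
  end;
  TMemoryBarcodeScanner = class // composes both axes, implements neither
  protected
    fConnection: TAbstractBarcodeConnection;
    fProtocol: TAbstractBarcodeProtocol;
  public
    constructor Create(const aInput: AnsiString);
    destructor Destroy; override;
    property Protocol: TAbstractBarcodeProtocol read fProtocol;
  end;

constructor TAbstractBarcodeProtocol.Create(aConnection: TAbstractBarcodeConnection);
begin
  inherited Create;
  fConnection := aConnection;
end;

constructor TMemoryBarcodeConnection.Create(const aInput: AnsiString);
begin
  inherited Create;
  fInput := aInput;
end;

function TMemoryBarcodeConnection.ReadChar: byte;
begin
  inc(fPos);
  if fPos <= length(fInput) then
    result := ord(fInput[fPos])
  else
    result := 13; // an exhausted buffer ends the current frame
end;

function TBCP1BarcodeProtocol.ReadFrame: TProtocolFrame;
var
  b: byte;
begin
  result := '';
  repeat
    b := fConnection.ReadChar; // the protocol only knows the abstraction
    if b <> 13 then
      result := result + Char(b);
  until b = 13;
end;

constructor TMemoryBarcodeScanner.Create(const aInput: AnsiString);
begin
  inherited Create;
  fConnection := TMemoryBarcodeConnection.Create(aInput);
  fProtocol := TBCP1BarcodeProtocol.Create(fConnection); // injection
end;

destructor TMemoryBarcodeScanner.Destroy;
begin
  fProtocol.Free;
  fConnection.Free;
  inherited Destroy;
end;

var
  scanner: TMemoryBarcodeScanner;
begin
  scanner := TMemoryBarcodeScanner.Create('4006381333931'#13'123'#13);
  try
    Assert(scanner.Protocol.ReadFrame = '4006381333931');
    Assert(scanner.Protocol.ReadFrame = '123');
    writeln('ok');
  finally
    scanner.Free;
  end;
end.
```

Swapping the in-memory connection for a serial or USB one, or BCP1 for BCP2, would only change the two lines of the scanner constructor.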

Another example is how our database classes are defined in SynDB.pas - see External SQL database access:

- The connection properties feature is handled by TSQLDBConnectionProperties classes;

- The actual living connection feature is handled by TSQLDBConnection classes;

- And the database requests feature is handled by TSQLDBStatement instances, using dedicated NewConnection / ThreadSafeConnection / NewStatement methods.

Therefore, you may change how a database connection is defined (e.g. add a property to a TSQLDBConnectionProperties child), and you won't have to change the statement implementation itself.

Do not mix UI and logic

Another practical "Single Responsibility Principle" smell may appear in your uses clause.

If your data-only or peripheral-only unit starts like this:

    unit MyDataModel;

    uses
      Winapi.Windows, mORMot, ...

It would induce a dependency on the Windows Operating System, whereas your data would certainly benefit from being OS-agnostic. Today's compilers (Delphi or FPC) target several Operating Systems, so coupling your data to the actual Windows unit is a sign of bad design.

Similarly, you may add a dependency on the VCL, via a reference to the Forms unit.

If your data-only or peripheral-only unit starts like the following, beware!

    unit MyDataModel;

    uses
      Winapi.Messages, Vcl.Forms, mORMot, ...

If you later want to use FMX, or LCL (from Lazarus) in your application, or want to use your MyDataModel unit on a pure server application without any GUI, you are stuck. The upcoming Windows Nano Server architecture, which targets the cloud and won't offer any GUI to the server applications, would even be very sensitive to the dependency chain of the executable.

Note that if you are used to developing in RAD mode, the units generated by the IDE wizards come with some default references in the uses clause of the generated .pas file. So take care not to introduce any such coupling into your own business code!

As a general rule, our ORM/SOA framework source code tries to avoid such dependencies. All OS specificities are centralized in our SynCommons.pas unit, and there is no dependency on the VCL when it is not mandatory, e.g. in mORMot.pas.

Following the RAD approach, you may start from your UI, i.e. define the needed classes in the unit where your visual form (may be VCL or FMX) is defined. Don't follow this tempting, but dangerous path!

Code like the following may be accepted for a small example (e.g. the ones supplied in the SQlite3\Samples sub-folder of our repository source code tree), but is to be absolutely avoided for any production-ready mORMot-based application:

    interface

    uses
      Winapi.Windows, Winapi.Messages, System.SysUtils, System.Variants, System.Classes,
      Vcl.Graphics, Vcl.Controls, Vcl.Forms, Vcl.Dialogs, mORMot, mORMotSQLite3;

    type
      TForm1 = class(TForm)
        procedure FormCreate(Sender: TObject);
      private
        fModel: TSQLModel;
        fDatabase: TSQLRestServerDB;
      public
        { Public declarations }
      end;

    implementation

    procedure TForm1.FormCreate(Sender: TObject);
    begin
      fModel := TSQLModel.Create([TSQLMyOwnRecord],'root');
      fDatabase := TSQLRestServerDB.Create(fModel,ChangeFileExt(paramstr(0),'.db'));
    end;

In your actual project units, when you define an ORM or SOA class, never include GUI methods within it. Indeed, the fact that our TSQLRecord class definitions are common to both Client and Server sides makes this principle mandatory. You should not have any GUI-related method on the Server side, and the Client side could use the object instances with several GUI implementations (Delphi Client, AJAX Client...).

Therefore, if you want to change the GUI, you won't have to recompile the TSQLRecord class and the associated database model. If you want to deploy your server on a Linux box (using e.g. CrossKylix or FPC as compiler), you could reuse your very same code, since you do not have any reference to the VCL in your business code.

This Single Responsibility principle may sound simple and easy to follow (even obvious), but in fact, it is one of the hardest principles to get right. Naturally, we tend to join responsibilities in our class definitions. Our framework architecture, by its Client-Server nature and all its high-level methods involving interface, will push you to follow this principle, but it is always up to the end coder to design his/her types properly.

2. Open/Closed Principle

When you define a class or a unit, at the same time:

- They shall be open for extension;

- But closed for modification.

It means that you should be able to extend your existing code, without breaking its initial behavior.

Some other guidelines may be added, but you got the main idea.

Conformance to this open/closed principle is what yields the greatest benefit of OOP, i.e.:

- Code re-usability;

- Code maintainability;

- Code extendibility.

Following this principle will take your code far away from a regular RAD style. But the benefits will be huge.

Applied to our framework units

When designing our ORM/SOA set of units, we tried to follow this principle. In fact, you should not have to modify its implementation. You should define your own units and classes, without the need to hack the framework source code.

Even if the Open Source paradigm allows you to modify the supplied code, this shall not be done unless you are either fixing a bug or adding a new common feature. This is in fact the purpose of our http://synopse.info web site, and most of the framework enhancements have come from user requests.

The framework Open Source license - see License - may encourage user contributions in order to fulfill the Open/closed design principle:

- Your application code extends the Synopse mORMot Framework by defining your own classes or event handlers - this is how it is open for extension;

- The main framework units shall remain inviolate, and common to all users - this illustrates the closed for modification design.

As a beneficial side effect, this principle will ensure that your code is ready to follow the framework updates (which are quite frequent). When a new version of mORMot is available, you should be able to retrieve it for free from our web site, replace your files locally, then build a new enhanced version of your application, with the benefit of all included fixes and optimizations. Even the source code repository is available - at http://synopse.info/fossil or from https://github.com/synopse/mORMot - and allows you to follow the ongoing evolution of the framework.

In short, abstraction is the key to peace of mind. All your code shall not depend on a particular implementation.

Open/Close in practice

In order to implement this principle, several conventions could be envisaged:

- You should rather define some abstract classes, then use specific overridden classes for each and every implementation: this is for instance how Client-Server classes were implemented - see Client-Server process;

- All object members shall be declared private or protected - it is a good idea to use Service-Oriented Architecture (SOA) for defining server-side processes, and/or to make the TSQLRecord published properties read-only and use some client-side constructor with parameters;

- No singleton nor global variable - ever;

- RTTI is dangerous - that is, let our framework use RTTI functions for its own cooking, but do not use it in your code.

In our previous bar-code scanner class hierarchy, we would therefore define the Protocol and Connection properties as read-only:

    type
      TAbstractBarcodeScanner = class(TComponent)
      protected
        fProtocol: TAbstractBarcodeProtocol;
        fConnection: TAbstractBarcodeConnection;
        ...
      public
        property Protocol: TAbstractBarcodeProtocol read fProtocol;
        property Connection: TAbstractBarcodeConnection read fConnection;
        ...

In this code, the actual variables are stored as protected fields, with only getters (i.e. read) in the public section. There is no setter (i.e. write) attribute, which would allow user code to change the fProtocol/fConnection instances. You can still access those fields (it is mandatory in your inherited constructors), but user code should not.

As stated above, for our bar-code reader design, having dedicated classes for defining protocol and connection will also help implement the open/close principle. You would be able to define a new class, combining its own protocol and connection class instances, so it will be Open for extension. But you would not change the behavior of a class by inheriting it: since protocol and connection are uncoupled, and used via composition in a dedicated class, it will be Closed for modification.

Using the newer sealed directive on a class, or final on a virtual method, may help ensure that your class definition follows this principle. A sealed class cannot be inherited from, and a final method cannot be overridden, so you would not be able to change its behavior in inherited types, even if you are tempted to.

No Singleton nor global variables

About the singleton pattern, you should always avoid it in your code. In fact, a singleton was a C++ (and Java) hack invented to implement some kind of global variable, hidden behind a static class definition. Singletons were historically introduced to support mixed-mode application-wide initialization (mainly to allocate the stdio objects needed to manage the console), and were abused in business logic.

Once you use a singleton, or a global variable, you would miss most of the benefits of OOP. A typical use of singleton is to register some class instances globally for the application. You may see some frameworks - or some parts of the RTL - which would allow such global registration. But it would eventually void most benefits of proper dependency injection - see Dependency Inversion Principle - since you would not be able to have diverse resolutions of the same class.

For instance, if your database properties, or your application configuration, are stored within a singleton, or a global variable, you would certainly not be able to use several databases at once, or convert your single-user application with its GUI into a modern multi-user AJAX application:

    var
      DBServer: string = 'localhost';
      DBPort: integer = 1426;

      UITextColor: TColor = clNavy;
      UITextSize: integer = 12;

Such global variables are a smell of a broken Open/Closed Principle, since your project definitely won't be open for extension. Using a static class variable (as allowed in newer versions of Delphi) is just another way of defining a global variable, only adding the named scope of the class type.
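A minimal sketch of the alternative, assuming hypothetical TDBSettings and TDBClient classes, is to pass the settings to each consumer through its constructor, so that two databases can coexist in the same process:

```pascal
program InjectedSettings;
{$IFDEF FPC}{$mode delphi}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}
{$C+} // enable Assert()

uses
  SysUtils;

type
  /// hypothetical settings holder, replacing the DBServer/DBPort globals
  TDBSettings = class
  private
    fServer: string;
    fPort: integer;
  public
    constructor Create(const aServer: string; aPort: integer);
    property Server: string read fServer;
    property Port: integer read fPort;
  end;

  /// hypothetical consumer: it receives its settings, and never reads a global
  TDBClient = class
  private
    fSettings: TDBSettings; // injected, not owned
  public
    constructor Create(aSettings: TDBSettings);
    function ConnectionString: string;
  end;

constructor TDBSettings.Create(const aServer: string; aPort: integer);
begin
  inherited Create;
  fServer := aServer;
  fPort := aPort;
end;

constructor TDBClient.Create(aSettings: TDBSettings);
begin
  inherited Create;
  fSettings := aSettings;
end;

function TDBClient.ConnectionString: string;
begin
  result := Format('%s:%d', [fSettings.Server, fSettings.Port]);
end;

var
  mainDB, archiveDB: TDBSettings;
  client1, client2: TDBClient;
begin
  // two databases at once: impossible with a single DBServer/DBPort pair
  mainDB := TDBSettings.Create('localhost', 1426);
  archiveDB := TDBSettings.Create('archive.example', 1427);
  client1 := TDBClient.Create(mainDB);
  client2 := TDBClient.Create(archiveDB);
  try
    Assert(client1.ConnectionString = 'localhost:1426');
    Assert(client2.ConnectionString = 'archive.example:1427');
    writeln(client1.ConnectionString, ' / ', client2.ConnectionString);
  finally
    client2.Free;
    client1.Free;
    archiveDB.Free;
    mainDB.Free;
  end;
end.
```

The host names and ports are placeholders; the point is that the caller, not a global, decides which settings each instance uses.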

Even if you do not define any global variable in your code, you may couple your code to an existing global variable. For instance, you may define some variables within your TMainForm = class(TForm) class defined in the IDE, then use its global MainForm: TMainForm variable, or the Application.MainForm property, in your code. Something should feel wrong when the unit where your TMainForm is defined starts to appear in your business code uses clause... just another global variable in disguise!

In our framework, we tried never to use global registration, except for the cases where it has been found safe to implement, e.g. when RTTI is cached, or JSON serialization is customized for a given type. All this information is orthogonal to the classes using it, so you may find some global variables in the framework units, only when it is worth it. For instance, we split TSQLRecord's information into a TSQLRecordProperties for the shared intangible RTTI values, and TSQLModelRecordProperties instances, one per TSQLModel, for all the TSQLModel/TSQLRest specific settings - see Several Models.

3. Liskov Substitution Principle

Even if her name may not be familiar to every developer, Barbara Liskov is a great computer scientist we should learn from. It is worth taking a look at her presentation at https://www.youtube.com/watch?v=GDVAHA0oyJU

The "Liskov substitution principle" states that, if TChild is a subtype of TParent, then objects of type TParent may be replaced with objects of type TChild (i.e., objects of type TChild may be substitutes for objects of type TParent) without altering any of the desirable properties of that program (correctness, task performed, etc.).

The example given by Barbara Liskov was about stacks and queues: if both have Push and Pop methods, they should not inherit from a single parent type, since the storage behavior of a stack is quite the contrary of a queue. In your program, if you start to replace a stack by a queue, you will meet strange behaviors, for sure. According to proper top-down design flow, both types should be uncoupled. You may implement a stack class using an in-memory list for storage, or another stack class using a remote SQL engine, but both would have to behave like a stack, i.e. according to the last-in first-out (LIFO) principle. On the other hand, any class implementing a queue type should follow the first-in first-out (FIFO) order, whatever kind of storage is used.
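To illustrate, here is a sketch with a hypothetical IIntegerStack interface and two storage implementations, a dynamic array and a linked list. The CheckLifo procedure only knows the interface, so the same test validates that both behave as a stack; a queue class would fail it, which is exactly why it should not share this contract:

```pascal
program LiskovStack;
{$IFDEF FPC}{$mode delphi}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}
{$C+} // enable Assert()

type
  /// the contract is "last-in first-out", not merely "has Push and Pop"
  IIntegerStack = interface
    procedure Push(aValue: integer);
    function Pop: integer;
  end;

  /// storage #1: a dynamic array
  TArrayStack = class(TInterfacedObject, IIntegerStack)
  private
    fItems: array of integer;
  public
    procedure Push(aValue: integer);
    function Pop: integer;
  end;

  /// storage #2: a linked list - another storage, the same LIFO behavior
  PNode = ^TNode;
  TNode = record
    Value: integer;
    Next: PNode;
  end;
  TLinkedStack = class(TInterfacedObject, IIntegerStack)
  private
    fTop: PNode;
  public
    destructor Destroy; override;
    procedure Push(aValue: integer);
    function Pop: integer;
  end;

procedure TArrayStack.Push(aValue: integer);
begin
  SetLength(fItems, length(fItems) + 1);
  fItems[high(fItems)] := aValue;
end;

function TArrayStack.Pop: integer;
begin
  result := fItems[high(fItems)]; // the last pushed item comes out first
  SetLength(fItems, length(fItems) - 1);
end;

destructor TLinkedStack.Destroy;
begin
  while fTop <> nil do
    Pop; // releases the remaining nodes
  inherited Destroy;
end;

procedure TLinkedStack.Push(aValue: integer);
var
  node: PNode;
begin
  New(node);
  node^.Value := aValue;
  node^.Next := fTop;
  fTop := node;
end;

function TLinkedStack.Pop: integer;
var
  node: PNode;
begin
  node := fTop; // the top node is the last pushed one
  result := node^.Value;
  fTop := node^.Next;
  Dispose(node);
end;

/// one test, written against the abstraction, reused for every implementation
procedure CheckLifo(const aStack: IIntegerStack);
begin
  aStack.Push(1);
  aStack.Push(2);
  aStack.Push(3);
  Assert(aStack.Pop = 3);
  Assert(aStack.Pop = 2);
  Assert(aStack.Pop = 1);
end;

var
  stack: IIntegerStack;
begin
  stack := TArrayStack.Create;
  CheckLifo(stack);
  stack := TLinkedStack.Create; // also releases the array-based instance
  CheckLifo(stack);
  writeln('both implementations honor the LIFO contract');
end.
```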

In practical Delphi code, relying on abstractions may be implemented by two means:

- Using only abstract parent class variables when consuming objects;

- Using interface variables instead of class implementations.

Here, we do not use inheritance for sharing implementation code, but for defining an expected behavior. Sometimes, you may break the Liskov Substitution principle in some implementation methods which would be coded just to gather some reusable pieces of code, preparing some behavior which may be used only by some of the subtypes. Such "internal" virtual methods of a subtype may change the behavior of its inherited method, for the sake of efficiency and maintainability. But with this kind of implementation inheritance, which is closer to plumbing than designing, methods should be declared as protected, and not published as part of the type definition.

By the way, this is exactly what interface type definitions have to offer. You can inherit from another interface, and this kind of polymorphism should strictly follow the Liskov Substitution principle, whereas the class types implementing the interfaces may use some protected methods which break the principle, for the sake of code efficiency.

In order to fulfill this principle, you should:

- Properly name (and comment) your class or interface definition: having Push and Pop methods may not be enough to define a contract, so in this case type inheritance would define the expected behavior - as a consequence, you should rather stay away from "duck typing" patterns and dynamic languages, and rely on strong typing;

- Use the "behavior" design pattern when defining your objects hierarchy - for instance, even if a square is mathematically a rectangle, a TSquare object is definitely not a TRectangle object, since the behavior of a TSquare object is not consistent with the behavior of a TRectangle object (a square's width always equals its height, whereas it is not the case for most rectangles);

- Write your tests using abstract local variables (this will allow test code reuse for all children classes);

- Follow the concept of Design by Contract, i.e. the Meyer's rule defined as "when redefining a routine [in a derivative], you may only replace its precondition by a weaker one, and its postcondition by a stronger one" - the use of preconditions and postconditions also enforces a testing model;

- Separate your classes hierarchy: typically, you may consider using separate object types for implementing persistence and object creation (this is the common separation between Factory and Repository patterns).
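The TSquare/TRectangle case can be made concrete with a small sketch; the virtual SetWidth/SetHeight setters are an assumption, since no such methods are defined above. The BehavesLikeRectangle function is written against the parent type only, as recommended, and it detects that TSquare cannot be substituted for TRectangle:

```pascal
program SquareRectangle;
{$IFDEF FPC}{$mode delphi}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}
{$C+} // enable Assert()

type
  TRectangle = class
  protected
    fWidth, fHeight: integer;
  public
    procedure SetWidth(aValue: integer); virtual;
    procedure SetHeight(aValue: integer); virtual;
    function Area: integer;
  end;

  /// "a square is a rectangle"... but it has to keep Width = Height
  TSquare = class(TRectangle)
  public
    procedure SetWidth(aValue: integer); override;
    procedure SetHeight(aValue: integer); override;
  end;

procedure TRectangle.SetWidth(aValue: integer);
begin
  fWidth := aValue;
end;

procedure TRectangle.SetHeight(aValue: integer);
begin
  fHeight := aValue;
end;

function TRectangle.Area: integer;
begin
  result := fWidth * fHeight;
end;

procedure TSquare.SetWidth(aValue: integer);
begin
  fWidth := aValue;
  fHeight := aValue; // preserves the square invariant...
end;

procedure TSquare.SetHeight(aValue: integer);
begin
  fWidth := aValue;  // ...but silently changes the other dimension
  fHeight := aValue;
end;

/// a test written against the parent type only
function BehavesLikeRectangle(aShape: TRectangle): boolean;
begin
  aShape.SetWidth(5);
  aShape.SetHeight(4);
  result := aShape.Area = 20; // what any TRectangle user would expect
end;

var
  rect, square: TRectangle;
begin
  rect := TRectangle.Create;
  square := TSquare.Create;
  try
    Assert(BehavesLikeRectangle(rect));
    Assert(not BehavesLikeRectangle(square)); // 4 * 4 = 16, not 20
    writeln('TSquare cannot be substituted for TRectangle');
  finally
    square.Free;
    rect.Free;
  end;
end.
```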

Use parent classes

For our framework, it would signify that TSQLRestServer or TSQLRestClient instances can be substituted for a TSQLRest object. Most ORM methods expect a TSQLRest parameter to be supplied.

For instance, you may write:

    var
      anyRest: TSQLRest;
      ID: TID;
      rec1,rec2: TSQLMyRecord;
    ...
      ID := anyRest.Add(rec1,true);
      rec2 := TSQLMyRecord.Create(anyRest,ID);
    ...

And you may set any kind of actual class instance to anyRest, either a locally stored database engine, or an HTTP remote access:

    anyRest := TSQLRestServerDB.Create(aModel,'mydatabase.db');
    anyRest := TSQLHttpClient.Create('1.2.3.4','8888',aModel,false);

You may even find in the dddInfraSettings.pas unit a powerful TRestSettings.NewRestInstance() method which is able to instantiate the needed TSQLRest inherited class from a set of JSON settings, i.e. either a TSQLHttpClient, or a local TSQLRestServerFullMemory, or a TSQLRestServerDB - the latter either with a local SQlite3 database, an external SQL engine, or an external NoSQL/MongoDB database.

Your code shall refer to abstractions, not to implementations. By using only methods and properties available at classes parent level, your code won't need to change because of a specific implementation.

I'm your father, Luke

You should note that, in the Liskov substitution principle definition, "parent" and "child" are not absolute. Which actual class is considered as "parent" may depend on the context of use.

Most of the time, the parent may be the highest class in the hierarchy. For instance, in the context of a GUI application, you may use the most abstract class to access the application data, may it be stored locally, or remotely accessed over HTTP.

But when you initialize the class instance of a locally stored server, you may need to set up the actual data storage, e.g. the file name or the remote SQL/NoSQL settings. In this context, you would need to access the "child" properties, regardless of the "parent" abstract use which would take place later on in the GUI part of the application.

Furthermore, in the context of data replication, the server side and the client side would have different behaviors. In fact, they may be used as master or slave database, so in this case, you may explicitly define a server or client class in your code. This is what our ORM does for its master/slave replication - see Master/slave replication.

If we come back to our bar-code scanner sample, most of your GUI code may rely on TAbstractBarcodeScanner components. But in the context of the application options, you may define the internal properties of each "child" class - e.g. the serial or USB port name - so in this case, your new "parent" class may be either TSerialBarCodeScanner or TUsbBarCodeScanner, or even better the TSerialBarcodeConnection or TUsbBarcodeConnection properties, to fulfill the Single Responsibility principle.

Don't check the type at runtime

Some patterns shall never appear in your code. Otherwise, code refactoring should be done as soon as possible, to let your project be maintainable in the future.

Statements like the following are to be avoided, in either the parent's or the child's methods:

    procedure TAbstractBarcodeScanner.SomeMethod;
    begin
      if self is TSerialBarcodeScanner then
      begin
        ....
      end
      else
      if self is TUsbBarcodeScanner then
        ...

Or, in its disguised variation, using an enumerated item:

    case fProtocol.MeanOfCommunication of
      meanSerial:
        begin
          ...
        end;
      meanUsb:
        ...

This latter piece of code does not check self, but the fProtocol protected field. So even if you try to implement the Single Responsibility principle, you may still break Liskov Substitution!

Note that both patterns will eventually break the Single Responsibility principle: each behavior shall be defined in its own child class methods. The Open/Close principle would also be broken, since the class won't be open for extension without touching the parent class, and modifying the nested if self is T* then ... or case fProtocol.* of ... expressions.
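A minimal sketch of the fix, with hypothetical PortDescription and Describe methods, moves each per-kind behavior into an overridden virtual method, so that the parent never needs to test its own type:

```pascal
program NoTypeCheck;
{$IFDEF FPC}{$mode delphi}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}
{$C+} // enable Assert()

type
  TAbstractBarcodeConnection = class
  public
    /// each child describes itself: no "if self is ..." in the parent
    function PortDescription: string; virtual; abstract;
    function Describe: string;
  end;

  TSerialBarcodeConnection = class(TAbstractBarcodeConnection)
  private
    fComPort: string;
  public
    constructor Create(const aComPort: string);
    function PortDescription: string; override;
  end;

  TUsbBarcodeConnection = class(TAbstractBarcodeConnection)
  public
    function PortDescription: string; override;
  end;

function TAbstractBarcodeConnection.Describe: string;
begin
  // relies only on the abstract contract: adding a new kind of connection
  // requires a new child class, but no change in this parent method
  result := 'scanner on ' + PortDescription;
end;

constructor TSerialBarcodeConnection.Create(const aComPort: string);
begin
  inherited Create;
  fComPort := aComPort;
end;

function TSerialBarcodeConnection.PortDescription: string;
begin
  result := 'serial ' + fComPort;
end;

function TUsbBarcodeConnection.PortDescription: string;
begin
  result := 'USB';
end;

var
  conn: TAbstractBarcodeConnection;
begin
  conn := TSerialBarcodeConnection.Create('COM1');
  try
    Assert(conn.Describe = 'scanner on serial COM1');
  finally
    conn.Free;
  end;
  conn := TUsbBarcodeConnection.Create;
  try
    Assert(conn.Describe = 'scanner on USB');
    writeln(conn.Describe);
  finally
    conn.Free;
  end;
end.
```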

Partially abstract classes

Another code smell may appear when you define a method which will stay abstract for some children instantiated in the project. It would imply that some of the parent class behavior is not implemented at this particular hierarchy level. So you would not be able to use all the parent's methods, as would be expected by the Liskov Substitution principle.

Note that the compiler will complain about it, warning that you are constructing an instance of a class with abstract methods. Never ignore such warnings - which may benefit from being handled as errors at compilation time, IMHO. The (in)famous "Abstract Error" error dialog, which may appear at runtime, reflects this bad code implementation.

A more subtle violation of Liskov may appear if you break the expectation of the parent class. The following code, which emulates a bar-code reader peripheral by sending the frame by email for debugging purposes (why not?), clearly fails the Design by Contract approach:

    TEMailEmulatedBarcodeProtocol = class(TAbstractBarcodeProtocol)
    protected
      function ReadFrame: TProtocolFrame; override;
      procedure WriteFrame(const aFrame: TProtocolFrame); override;
      ...

    function TEMailEmulatedBarcodeProtocol.ReadFrame: TProtocolFrame;
    begin
      raise EBarcodeException.CreateUTF8('%.ReadFrame is not implemented!',[self]);
    end;

    procedure TEMailEmulatedBarcodeProtocol.WriteFrame(const aFrame: TProtocolFrame);
    begin
      SendEmail(fEmailNotificationAddress,aFrame.AsString);
    end;

We expected this class to fully implement the TAbstractBarcodeProtocol contract, whereas calling TEMailEmulatedBarcodeProtocol.ReadFrame would not be able to read any data frame, but would raise an exception. So we cannot use this TEMailEmulatedBarcodeProtocol class as a replacement for any other TAbstractBarcodeProtocol class, otherwise it would fail at runtime.

A correct implementation may be to define a TFakeBarcodeProtocol class, implementing all the parent methods via a set of events or some text-based scenario, so that it would behave just like a correct TAbstractBarcodeProtocol class, to the full extent of its expectations.
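Such a fake could look like the following sketch. Its scenario-driven design is only one possibility: TFakeBarcodeProtocol's fields and helper methods are hypothetical, TProtocolFrame is simplified to a string, and returning an empty frame once the scenario is exhausted is an assumption about the parent contract. Both ReadFrame and WriteFrame are fully implemented, so code written against TAbstractBarcodeProtocol works unchanged:

```pascal
program FakeProtocol;
{$IFDEF FPC}{$mode delphi}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}
{$C+} // enable Assert()

type
  TProtocolFrame = string; // simplification for this sketch

  TAbstractBarcodeProtocol = class
  public
    function ReadFrame: TProtocolFrame; virtual; abstract;
    procedure WriteFrame(const aFrame: TProtocolFrame); virtual; abstract;
  end;

  /// hypothetical fake: every parent method is implemented, from a scenario
  TFakeBarcodeProtocol = class(TAbstractBarcodeProtocol)
  private
    fScenario: array of TProtocolFrame; // frames to be returned by ReadFrame
    fReadIndex: integer;
    fWritten: array of TProtocolFrame;  // frames received by WriteFrame
  public
    constructor Create(const aScenario: array of TProtocolFrame);
    function ReadFrame: TProtocolFrame; override;
    procedure WriteFrame(const aFrame: TProtocolFrame); override;
    function WrittenCount: integer;
    function Written(aIndex: integer): TProtocolFrame;
  end;

constructor TFakeBarcodeProtocol.Create(const aScenario: array of TProtocolFrame);
var
  i: integer;
begin
  inherited Create;
  SetLength(fScenario, length(aScenario));
  for i := 0 to high(aScenario) do
    fScenario[i] := aScenario[i];
end;

function TFakeBarcodeProtocol.ReadFrame: TProtocolFrame;
begin
  // assumption: once the scenario is exhausted, an empty frame is returned
  if fReadIndex > high(fScenario) then
    result := ''
  else
  begin
    result := fScenario[fReadIndex];
    inc(fReadIndex);
  end;
end;

procedure TFakeBarcodeProtocol.WriteFrame(const aFrame: TProtocolFrame);
begin
  // recorded instead of being sent (or e-mailed), so that tests can check it
  SetLength(fWritten, length(fWritten) + 1);
  fWritten[high(fWritten)] := aFrame;
end;

function TFakeBarcodeProtocol.WrittenCount: integer;
begin
  result := length(fWritten);
end;

function TFakeBarcodeProtocol.Written(aIndex: integer): TProtocolFrame;
begin
  result := fWritten[aIndex];
end;

/// code under test: it only knows the abstract parent class
function EchoOneFrame(aProtocol: TAbstractBarcodeProtocol): TProtocolFrame;
begin
  result := aProtocol.ReadFrame;
  aProtocol.WriteFrame('ACK ' + result);
end;

var
  fake: TFakeBarcodeProtocol;
begin
  fake := TFakeBarcodeProtocol.Create(['4006381333931']);
  try
    Assert(EchoOneFrame(fake) = '4006381333931');
    Assert(fake.WrittenCount = 1);
    Assert(fake.Written(0) = 'ACK 4006381333931');
    Assert(fake.ReadFrame = ''); // scenario exhausted
    writeln('ok');
  finally
    fake.Free;
  end;
end.
```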

Messing units dependencies

Last but not least, if you need to explicitly add child classes units to the parent class unit uses clause, it looks like you have just broken the Liskov Substitution principle.

    unit AbstractBarcodeScanner;

    uses
      SysUtils,
      Classes,
      SerialBarcodeScanner, // Liskov says: "it smells"!
      UsbBarcodeScanner;    // Liskov says: "it smells"!
    ...

If your code is like this, you would have to remove the reference to the inherited classes, for sure.

Even a dependency on one of the low-level implementation details is to be avoided:

    unit AbstractBarcodeScanner;

    uses
      Windows,
      SysUtils,
      Classes,
      ComPort;
    ...

Your abstract parent class should not be coupled to a particular Operating System, or a means of communication, which may not be needed. Why would you add a dependency on the raw RS-232 communication protocol, which is very likely to be deprecated?

One way of getting rid of this dependency is to define some abstract types (e.g. enumerations or simple structures like record), which would then be translated into the final types as expected by the ComPort.pas or Windows.pas units. Consider putting all the child classes' dependencies at constructor level, and/or use class composition via the Single Responsibility principle, so that the parent class definition would not be polluted by implementation details of its children.

You may also use a registration list, maintained by the parent unit, which would be able to register the classes implementing a particular behavior at runtime. Thanks to Liskov, you would be able to substitute any parent class by any of its inherited implementations, so defining the types at runtime only should not be an issue.
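A minimal sketch of such a registry relies on Delphi class references and a virtual constructor. RegisterConnection, NewConnection, RegisteredConnections and the Name method are hypothetical; in a real project each child unit would typically register itself in its own initialization section, so that the parent unit never lists its children in its uses clause:

```pascal
program ConnectionRegistry;
{$IFDEF FPC}{$mode delphi}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}
{$C+} // enable Assert()

uses
  SysUtils;

type
  TAbstractBarcodeConnection = class
  public
    // virtual, so that a class reference creates the actual child
    constructor Create; virtual;
    function Name: string; virtual; abstract;
  end;
  TAbstractBarcodeConnectionClass = class of TAbstractBarcodeConnection;

  TSerialBarcodeConnection = class(TAbstractBarcodeConnection)
  public
    function Name: string; override;
  end;

  TUsbBarcodeConnection = class(TAbstractBarcodeConnection)
  public
    function Name: string; override;
  end;

var
  // hypothetical registration list, owned by the parent unit
  RegisteredConnections: array of TAbstractBarcodeConnectionClass;

constructor TAbstractBarcodeConnection.Create;
begin
  inherited Create;
end;

procedure RegisterConnection(aClass: TAbstractBarcodeConnectionClass);
begin
  SetLength(RegisteredConnections, length(RegisteredConnections) + 1);
  RegisteredConnections[high(RegisteredConnections)] := aClass;
end;

function NewConnection(const aClassName: string): TAbstractBarcodeConnection;
var
  i: integer;
begin
  for i := 0 to high(RegisteredConnections) do
    if SameText(RegisteredConnections[i].ClassName, aClassName) then
    begin
      result := RegisteredConnections[i].Create; // runs the child's constructor
      exit;
    end;
  raise Exception.CreateFmt('Unknown connection class %s', [aClassName]);
end;

function TSerialBarcodeConnection.Name: string;
begin
  result := 'serial';
end;

function TUsbBarcodeConnection.Name: string;
begin
  result := 'USB';
end;

var
  conn: TAbstractBarcodeConnection;
begin
  // in a real project, each child unit would call RegisterConnection
  // from its own initialization section
  RegisterConnection(TSerialBarcodeConnection);
  RegisterConnection(TUsbBarcodeConnection);
  conn := NewConnection('TUsbBarcodeConnection'); // e.g. read from settings
  try
    Assert(conn.Name = 'USB');
    writeln(conn.ClassName, ' created from its registered class name');
  finally
    conn.Free;
  end;
end.
```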

Practical advantages

The main advantages of this coding pattern are the following:

- Thanks to this principle, you will be able to stub or mock an interface or a class - see Interfaces in practice: dependency injection, stubs and mocks - e.g. uncouple your object persistence from the actual database it runs on: this principle is therefore mandatory for implementing unit testing in your project;

- Furthermore, testing would be available not only at isolation level (testing each child class), but also at abstracted level, i.e. from the client point of view - you can have implementations which behave correctly when tested individually, but which fail when tested at a higher level if the Liskov principle was broken;

- As we have seen, if this principle is violated, the other principles are very likely to be also broken - e.g. the parent class would need to be modified whenever a new derivative of the base class is defined (violation of the Open/Close principle), or your class types may implement more than one behavior at a time (violation of the Single Responsibility principle);

- Code re-usability is enhanced by method re-usability: a method defined at a parent level does not need to be implemented for each child.

The SOA and ORM concepts, as implemented by our framework, try to be compliant with the Liskov substitution principle. It is true at class level for the ORM, but a more direct Design by Contract implementation pattern is also available, since the whole SOA stack involves a wider usage of interfaces in your projects.

4. Interface Segregation Principle

This principle states that once an interface has become too 'fat' it shall be split into smaller and more specific interfaces so that any clients of the interface will only know about the methods that pertain to them. In a nutshell, no client should be forced to depend on methods it does not use.

As a result, it will help a system stay decoupled and thus easier to re-factor, change, and redeploy.

Consequence of the other principles

Interface segregation should first appear at class level. Following the Single Responsibility principle, you are very likely to define several smaller classes, each with a small set of methods. Then use dedicated types of class, relying on composition to expose their own higher-level set of methods.

The bar-code class hierarchy illustrates this concept. Each T*BarcodeProtocol and T*BarcodeConnection class will have its own set of methods, dedicated either to protocol handling, or data transmission. Then the T*BarCodeScanner classes will compose those smaller classes into a new class, with a single event handler:

    type
      TAbstractBarcodeScanner = class; // forward declaration, used by the event type

      TOnBarcodeScanned = procedure(Sender: TAbstractBarcodeScanner; const Barcode: string) of object;

      TAbstractBarcodeScanner = class(TComponent)
        ...
        property OnBarcodeScanned: TOnBarcodeScanned read fOnBarcodeScanned write fOnBarcodeScanned;
        ...

This single OnBarcodeScanned event will be the published property of the component. Both protocol and connection details would be hidden within the internal classes. The final application would use this event, and react as expected, without actually knowing anything about the implementation details.

Using interfaces

The SOA part of the framework allows direct use of interface types to implement services. This great Client-Server SOA implementation pattern - see Server side Services - helps decouple all services into individual small methods. In this case also, the stateless design used will reduce the use of 'fat' session-related processes: an object lifetime can be safely driven by the interface scope.

Defining a Delphi interface instead of a plain class helps create small and business-specific contracts, which can be executed on both client and server side, with the same exact code.

Since the framework makes interface consumption and publication very easy, you won't be afraid of exposing your implementation classes as small pertinent interfaces.
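As a sketch, with hypothetical IBarcodeReader and IBarcodeSetup interfaces, a single implementation class may publish two small contracts, and each client only sees the one it needs:

```pascal
program SegregatedInterfaces;
{$IFDEF FPC}{$mode delphi}{$ELSE}{$APPTYPE CONSOLE}{$ENDIF}
{$C+} // enable Assert()

type
  /// what an inventory client needs: reading only
  IBarcodeReader = interface
    function ReadBarcode: string;
  end;

  /// what an options screen needs: device setup only
  IBarcodeSetup = interface
    procedure SetBeepEnabled(aValue: boolean);
    function BeepEnabled: boolean;
  end;

  /// one implementation class may publish both small contracts
  TFakeScanner = class(TInterfacedObject, IBarcodeReader, IBarcodeSetup)
  private
    fBeep: boolean;
  public
    function ReadBarcode: string;
    procedure SetBeepEnabled(aValue: boolean);
    function BeepEnabled: boolean;
  end;

function TFakeScanner.ReadBarcode: string;
begin
  result := '4006381333931'; // fixed value, standing in for real hardware
end;

procedure TFakeScanner.SetBeepEnabled(aValue: boolean);
begin
  fBeep := aValue;
end;

function TFakeScanner.BeepEnabled: boolean;
begin
  result := fBeep;
end;

/// this client cannot even see the setup methods
function CountItem(const aReader: IBarcodeReader): string;
begin
  result := 'counted ' + aReader.ReadBarcode;
end;

var
  scanner: TFakeScanner;
  reader: IBarcodeReader;
  setup: IBarcodeSetup;
begin
  scanner := TFakeScanner.Create;
  reader := scanner; // from now on, the interface references own the instance
  setup := scanner;
  setup.SetBeepEnabled(true);
  Assert(setup.BeepEnabled);
  Assert(CountItem(reader) = 'counted 4006381333931');
  writeln(CountItem(reader));
end.
```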

For instance, if you want to publish an API for third parties, you may consider publishing dedicated interfaces, each matching the expectations of one kind of API consumer. Your main implementation logic then won't be polluted by how the API is consumed and, correspondingly, the published API can stay closer to each particular client's needs, without being polluted by the other clients' needs. DDD definitely benefits from Interface Segregation, since this principle is the golden path to avoiding domain leaking - see DTO and Events to avoid domain leaking.
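A minimal sketch of Interface Segregation in Delphi follows. One implementation class is published through two narrow interfaces, one per kind of consumer. The names IStockQuery, IStockAdmin and TStockService are hypothetical, and the single-item store only keeps the example short. The read-only consumer receives IStockQuery, so the compiler itself prevents it from calling SetQuantity.

```pascal
program InterfaceSegregation;

{$IFDEF FPC}{$mode delphi}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

type
  // Contract for read-only consumers (hypothetical name)
  IStockQuery = interface
    ['{1A2B3C4D-5E6F-4A1B-8C2D-3E4F5A6B7C8D}']
    function Quantity(const aSku: string): integer;
  end;

  // Contract for back-office consumers (hypothetical name)
  IStockAdmin = interface
    ['{2B3C4D5E-6F7A-4B2C-9D3E-4F5A6B7C8D9E}']
    procedure SetQuantity(const aSku: string; aValue: integer);
  end;

  // One implementation, published through two narrow interfaces
  TStockService = class(TInterfacedObject, IStockQuery, IStockAdmin)
  private
    fSku: string;
    fQuantity: integer;
  public
    function Quantity(const aSku: string): integer;
    procedure SetQuantity(const aSku: string; aValue: integer);
  end;

function TStockService.Quantity(const aSku: string): integer;
begin
  if aSku = fSku then
    result := fQuantity
  else
    result := 0;
end;

procedure TStockService.SetQuantity(const aSku: string; aValue: integer);
begin
  // single-item store, just to keep the sketch short
  fSku := aSku;
  fQuantity := aValue;
end;

// This client only needs to read, so it only sees IStockQuery
procedure ShowStock(const aQuery: IStockQuery; const aSku: string);
begin
  WriteLn(aSku, ': ', aQuery.Quantity(aSku));
end;

var
  Admin: IStockAdmin;
  Query: IStockQuery;
begin
  Admin := TStockService.Create;  // back-office view of the instance
  Query := Admin as IStockQuery;  // read-only view of the same instance
  Admin.SetQuantity('ABC-123', 42);
  ShowStock(Query, 'ABC-123');
end.
```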

5. Dependency Inversion Principle

Another form of decoupling is to invert the dependency between the high and low levels of a software design:

- High-level modules should not depend on low-level modules. Both should depend on abstractions;

- Abstractions should not depend upon details. Details should depend upon abstractions.

The goal of the dependency inversion principle is to decouple high-level components from low-level components, so that the high-level components can be reused with different low-level implementations. A simple implementation pattern is to use only interfaces owned by, and existing only within, the high-level component package.

This principle results in Inversion of Control (aka IoC): since you rely on abstractions, and try not to depend upon concretions (i.e. on implementation details), you should first concern yourself with defining your interfaces.
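Here is a minimal sketch of the principle. It is an interface-based variant of the bar-code design, and every name in it (IBarcodeConnection, TCheckoutScreen, TSerialBarcodeConnection, TUsbBarcodeConnection) is hypothetical. The high-level TCheckoutScreen owns and depends on the IBarcodeConnection abstraction only. Both low-level classes depend on that abstraction by implementing it, and they return hard-coded readings instead of talking to real devices. The concrete connection is supplied through the constructor, which is the plain constructor-injection form of the pattern.

```pascal
program DependencyInversion;

{$IFDEF FPC}{$mode delphi}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

type
  // Abstraction owned by the high-level package (hypothetical name)
  IBarcodeConnection = interface
    ['{3C4D5E6F-7A8B-4C3D-8E4F-5A6B7C8D9E0F}']
    function ReadBarcode: string;
  end;

  // High-level module: depends on the abstraction only
  TCheckoutScreen = class
  private
    fConnection: IBarcodeConnection;
  public
    constructor Create(aConnection: IBarcodeConnection); // dependency is injected
    function ScanItem: string;
  end;

  // Low-level details: each one depends on (implements) the abstraction
  TSerialBarcodeConnection = class(TInterfacedObject, IBarcodeConnection)
  public
    function ReadBarcode: string;
  end;

  TUsbBarcodeConnection = class(TInterfacedObject, IBarcodeConnection)
  public
    function ReadBarcode: string;
  end;

constructor TCheckoutScreen.Create(aConnection: IBarcodeConnection);
begin
  inherited Create;
  fConnection := aConnection;
end;

function TCheckoutScreen.ScanItem: string;
begin
  result := 'Item ' + fConnection.ReadBarcode; // same code for any device
end;

function TSerialBarcodeConnection.ReadBarcode: string;
begin
  result := '4006381333931'; // stands in for data read from an RS-232 port
end;

function TUsbBarcodeConnection.ReadBarcode: string;
begin
  result := '9780201633610'; // stands in for data read from a USB device
end;

var
  Checkout: TCheckoutScreen;
begin
  Checkout := TCheckoutScreen.Create(TSerialBarcodeConnection.Create);
  try
    WriteLn(Checkout.ScanItem);
  finally
    Checkout.Free;
  end;
  // USB support: one new low-level class, TCheckoutScreen is untouched
  Checkout := TCheckoutScreen.Create(TUsbBarcodeConnection.Create);
  try
    WriteLn(Checkout.ScanItem);
  finally
    Checkout.Free;
  end;
end.
```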

Upside Down Development

In conventional application architecture, lower-level components are designed to be consumed by higher-level components which enable increasingly complex systems to be built. This design limits the reuse opportunities of the higher-level components, and certainly breaks the Liskov substitution principle.

For our bar-code reader sample, we may be tempted to start from the final TSerialBarcodeScanner class we need in our application. Our project leader asked us to allow bar-code scanning in our flagship application, and the scope of the development has been limited to supporting a single device model, in RS-232 mode - this may be the device already owned by our end customer.

This particular customer may have found some RS-232 bar-code relics from the 90s in its closets but, as an experienced programmer, you know that the next step will be to support USB in the very near future. All this bar-code reading stuff will be marketed by your company, so it is very likely that another customer will soon ask to use its own brand-new bar-code scanners... which will support only USB.

So you would model your classes as above.

Even if the TUsbBarCodeScanner class (and its corresponding TUsbBarcodeConnection class) is neither written nor tested yet (you do not even have an actual USB bar-code scanner to test with!), you are prepared for it.

When you eventually add USB support, the UI part of the application won't have to be touched: you just implement your new inherited class, leveraging all the previous code. Following Dependency Inversion from the beginning will definitely save you time. Even in an Agile process, where "Responding to change" is most valuable, the small extra effort of starting from the abstraction alongside the initial implementation is very beneficial.

In fact, the Dependency Inversion principle is a prerequisite for proper Test-Driven Development. Following the TDD pattern, you first write your test, see it fail, then write the implementation. To write the test, you need the abstracted interface of the feature to be available. So you start from the abstraction, then write the concretion.
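The test-first flow can be sketched as follows, under a few labeled assumptions. The scanner is assumed to deliver EAN-13 codes, and the business rule under test is the EAN-13 check digit. All names (IBarcodeConnection, TStubBarcodeConnection, TBarcodeReader, IsValidEan13, ReaderAccepts) are hypothetical. The stub is a hand-written class, not the framework's TInterfaceStub. The order of the numbered comments follows the text: abstraction first, then a stub, then code written against the abstraction. The checks run before any serial or USB class exists.

```pascal
program BarcodeTestFirst;

{$IFDEF FPC}{$mode delphi}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

type
  // 1. The abstraction comes first (hypothetical name)
  IBarcodeConnection = interface
    ['{4D5E6F7A-8B9C-4D4E-9F5A-6B7C8D9E0F1A}']
    function ReadLine: string;
  end;
  // 2. A hand-written stub replays a canned answer: no device is needed
  TStubBarcodeConnection = class(TInterfacedObject, IBarcodeConnection)
  private
    fAnswer: string;
  public
    constructor Create(const aAnswer: string);
    function ReadLine: string;
  end;
  // 3. The code under test only knows the abstraction
  TBarcodeReader = class
  private
    fConnection: IBarcodeConnection;
  public
    constructor Create(aConnection: IBarcodeConnection);
    function ReadValidBarcode(out aCode: string): boolean;
  end;

constructor TStubBarcodeConnection.Create(const aAnswer: string);
begin
  inherited Create;
  fAnswer := aAnswer;
end;

function TStubBarcodeConnection.ReadLine: string;
begin
  result := fAnswer;
end;

// EAN-13 rule: weights 1,3,1,3... on the first 12 digits determine the 13th
function IsValidEan13(const aCode: string): boolean;
var
  i, sum: integer;
begin
  result := false;
  if Length(aCode) <> 13 then
    exit;
  for i := 1 to 13 do
    if (aCode[i] < '0') or (aCode[i] > '9') then
      exit;
  sum := 0;
  for i := 1 to 12 do
    if odd(i) then
      inc(sum, Ord(aCode[i]) - Ord('0'))
    else
      inc(sum, 3 * (Ord(aCode[i]) - Ord('0')));
  result := (10 - sum mod 10) mod 10 = Ord(aCode[13]) - Ord('0');
end;

constructor TBarcodeReader.Create(aConnection: IBarcodeConnection);
begin
  inherited Create;
  fConnection := aConnection;
end;

function TBarcodeReader.ReadValidBarcode(out aCode: string): boolean;
begin
  aCode := fConnection.ReadLine;
  result := IsValidEan13(aCode);
end;

// 4. Tests exercise the reader through the stub, before any device class exists
function ReaderAccepts(const aAnswer: string): boolean;
var
  reader: TBarcodeReader;
  code: string;
begin
  reader := TBarcodeReader.Create(TStubBarcodeConnection.Create(aAnswer));
  try
    result := reader.ReadValidBarcode(code);
  finally
    reader.Free;
  end;
end;

begin
  WriteLn('valid code accepted:     ', ReaderAccepts('4006381333931'));
  WriteLn('bad check digit refused: ', not ReaderAccepts('4006381333932'));
  WriteLn('short code refused:      ', not ReaderAccepts('12345'));
end.
```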

Injection patterns

In other languages (like Java or .NET), various patterns such as Plug-in, Service Locator, or Dependency Injection are employed to facilitate the run-time provisioning of the chosen low-level component implementation to the high-level component.

Our Client-Server architecture facilitates this decoupling pattern for its ORM part, and allows the use of native Delphi interfaces to call services from an abstract factory for its SOA part.

A set of dedicated classes, defined in mORMot.pas, lets you leverage IoC: see e.g. TInjectableObject, TInterfaceResolver, TInterfaceResolverForSingleInterface and TInterfaceResolverInjected, which may be used in conjunction with the TInterfaceStub or TServiceContainer high-level mocking and SOA features of the framework - see Interfaces in practice: dependency injection, stubs and mocks and Client-Server services via interfaces.
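To make the Service Locator idea concrete without guessing at the framework's own API, here is a deliberately hand-rolled sketch. It does not use the signatures of TInterfaceResolver or TInjectableObject; refer to the chapters cited above for those. TServiceLocator, TServiceEntry, IBarcodeConnection and TUsbBarcodeConnection are hypothetical names. Only standard SysUtils routines (IsEqualGUID, Supports) are used. The registry maps an interface GUID to an implementing instance. The consumer asks for the abstraction and never names the concrete class.

```pascal
program TinyServiceLocator;

{$IFDEF FPC}{$mode delphi}{$ENDIF}
{$IFNDEF FPC}{$APPTYPE CONSOLE}{$ENDIF}

uses
  SysUtils; // IsEqualGUID, Supports

type
  IBarcodeConnection = interface
    ['{5E6F7A8B-9C0D-4E5F-8A6B-7C8D9E0F1A2B}']
    function ReadLine: string;
  end;

  TUsbBarcodeConnection = class(TInterfacedObject, IBarcodeConnection)
  public
    function ReadLine: string;
  end;

  // Hypothetical minimal registry entry: interface GUID -> implementing instance
  TServiceEntry = record
    IID: TGUID;
    Instance: IInterface;
  end;

  TServiceLocator = class
  private
    fEntries: array of TServiceEntry;
  public
    procedure Add(const aIID: TGUID; aInstance: IInterface);
    function Resolve(const aIID: TGUID; out aService): boolean;
  end;

function TUsbBarcodeConnection.ReadLine: string;
begin
  result := '9780201633610'; // stands in for data read from a USB device
end;

procedure TServiceLocator.Add(const aIID: TGUID; aInstance: IInterface);
var
  n: integer;
begin
  n := Length(fEntries);
  SetLength(fEntries, n + 1);
  fEntries[n].IID := aIID;
  fEntries[n].Instance := aInstance;
end;

function TServiceLocator.Resolve(const aIID: TGUID; out aService): boolean;
var
  i: integer;
begin
  for i := 0 to High(fEntries) do
    if IsEqualGUID(fEntries[i].IID, aIID) then
    begin
      // Supports performs QueryInterface, returning a reference-counted reference
      result := Supports(fEntries[i].Instance, aIID, aService);
      exit;
    end;
  result := false;
end;

var
  Locator: TServiceLocator;
  Connection: IBarcodeConnection;
begin
  Locator := TServiceLocator.Create;
  try
    // Composition root: the only place naming the concrete class
    Locator.Add(IBarcodeConnection, TUsbBarcodeConnection.Create);
    // Consumer: asks for the abstraction only
    if Locator.Resolve(IBarcodeConnection, Connection) then
      WriteLn('Resolved, read: ', Connection.ReadLine)
    else
      WriteLn('No implementation registered');
  finally
    Locator.Free;
  end;
end.
```

A Service Locator hides dependencies inside method bodies. This is why constructor injection, where the dependency appears in the constructor signature, is often preferred when the set of collaborators is known up front.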

Feedback needed

Feel free to comment on this article in our forum, as usual!